OpenAI releases Point-E, an AI that generates 3D Models

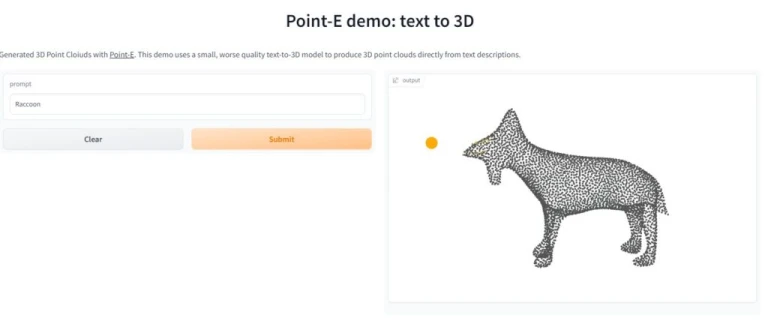

3D model generators may be the next innovation to rock the field of AI, as OpenAI releases Point-E. This week, OpenAI made Point-E, a machine learning system that generates a 3D object from a text prompt, available to the public. According to the accompanying research paper, Point-E can produce a 3D model in one to two minutes on a single Nvidia V100 GPU.

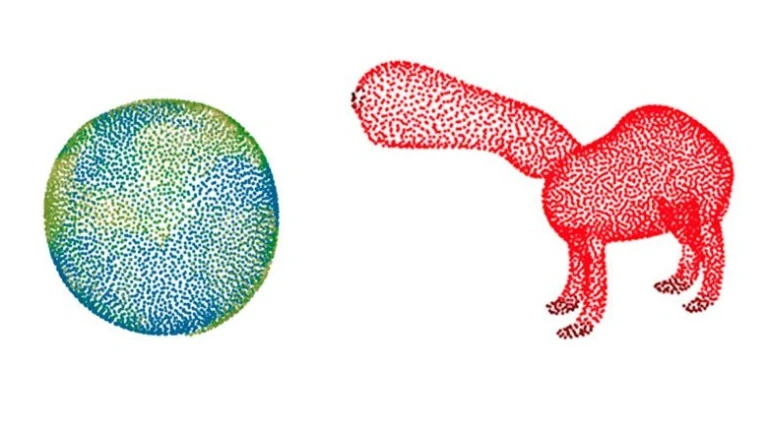

Point-E does not produce 3D objects in the conventional sense. Instead, it generates point clouds, discrete sets of data points in space that represent 3D shapes; hence the playful name. (The “E” in Point-E stands for “efficiency,” because it is purported to be faster than earlier approaches to 3D object generation.)
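To make the idea concrete, here is a minimal Python sketch of a point cloud as a data structure: an unordered list of positions with nothing connecting them into a surface. The `Point` class, its optional RGB colour field and the sphere-sampling helper are illustrative assumptions, not Point-E's actual data format.

```python
import math
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Point:
    """A single sample in a point cloud: a 3D position plus an RGB colour."""
    x: float
    y: float
    z: float
    rgb: tuple[float, float, float] = (0.5, 0.5, 0.5)  # hypothetical default grey


def sample_sphere(n: int, radius: float = 1.0, seed: int = 0) -> list[Point]:
    """Return n points scattered uniformly over a sphere's surface.

    The result is a toy point cloud: the points suggest a sphere, but
    nothing records which points are neighbours or forms a surface.
    """
    rng = random.Random(seed)
    cloud: list[Point] = []
    while len(cloud) < n:
        # A normalised Gaussian vector points in a uniformly random direction.
        vx, vy, vz = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
        norm = math.sqrt(vx * vx + vy * vy + vz * vz)
        if norm == 0.0:
            continue  # vanishingly rare, but avoids division by zero
        cloud.append(Point(radius * vx / norm, radius * vy / norm, radius * vz / norm))
    return cloud


def bounding_box(cloud: list[Point]) -> tuple[tuple[float, float, float], tuple[float, float, float]]:
    """Return the (min corner, max corner) of the axis-aligned box around the cloud."""
    xs = [p.x for p in cloud]
    ys = [p.y for p in cloud]
    zs = [p.z for p in cloud]
    return (min(xs), min(ys), min(zs)), (max(xs), max(ys), max(zs))
```

Calling `sample_sphere(1000)` yields 1,000 points that all lie on the unit sphere, yet the cloud itself carries no notion of a surface, which is why fine detail and texture are hard to recover from it.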

Point-E consists of two models, a text-to-image model and an image-to-3D model, plus a separate, standalone mesh-generating model. Like generative art systems such as OpenAI’s own DALL-E 2 and Stable Diffusion, the text-to-image model was trained on labeled images to learn the associations between words and visual concepts. The image-to-3D model, in turn, was trained on a set of images paired with 3D objects so that it learned to translate effectively between the two.

From a computational perspective, point clouds are easier to synthesize. However, they cannot capture an object’s fine-grained shape or texture, which is currently a major limitation of Point-E.

To work around this limitation, the Point-E team trained an additional AI system to convert Point-E’s point clouds into meshes. Meshes, which are collections of vertices, edges, and faces, are commonly used to define objects in 3D modeling and design. However, the researchers note in the paper that the model occasionally misses certain parts of objects, resulting in blocky or distorted shapes.
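By contrast with a point cloud, a mesh stores explicit connectivity. The sketch below is a hypothetical minimal representation, not anything taken from Point-E’s code: it builds a tetrahedron from four vertices and four triangular faces, derives the edges from the faces, and checks Euler’s formula V − E + F = 2 for a closed surface. It does not attempt the point-cloud-to-mesh conversion itself, which Point-E handles with its separately trained model.

```python
from dataclasses import dataclass

Vertex = tuple[float, float, float]
Face = tuple[int, int, int]  # three indices into Mesh.vertices


@dataclass
class Mesh:
    """A triangle mesh: vertex positions plus faces that connect them."""
    vertices: list[Vertex]
    faces: list[Face]

    def edges(self) -> set[tuple[int, int]]:
        """Collect each undirected edge once, as a sorted pair of vertex indices."""
        found: set[tuple[int, int]] = set()
        for a, b, c in self.faces:
            for u, v in ((a, b), (b, c), (c, a)):
                found.add((min(u, v), max(u, v)))
        return found

    def euler_characteristic(self) -> int:
        """V - E + F; equals 2 for a closed surface without holes, like a sphere."""
        return len(self.vertices) - len(self.edges()) + len(self.faces)


# A tetrahedron: the simplest closed triangle mesh.
tetrahedron = Mesh(
    vertices=[(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)],
    faces=[(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)],
)
```

A point cloud of the same shape would keep only the four positions; the `faces` list is exactly the information a point-cloud-to-mesh step has to infer, and getting it wrong is what produces blocky or distorted results.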

Applications of Point-E

The point clouds created by Point-E could be used to fabricate real-world objects, for instance through 3D printing. Thanks to the additional mesh-converting model, the system could also find its way into game and animation production workflows once it is a little more refined.

Although OpenAI may be the most recent company to enter the 3D object generation market, it is by no means the first. Earlier this year, Google released DreamFusion, a more developed version of Dream Fields, the generative 3D system it unveiled in 2021. Unlike Dream Fields, DreamFusion requires no prior training, which means it can generate 3D models of objects without 3D data.

Potential Intellectual Property Disputes

The open question is what kinds of intellectual property disputes might eventually arise. There is a sizable market for 3D models, and artists sell their original work on several online marketplaces, including CGStudio and CreativeMarket. If Point-E catches on and its models make their way onto these marketplaces, 3D artists may object, citing evidence that modern generative AI draws heavily from its training data, in this case existing 3D models. Like DALL-E 2, Point-E does not cite or credit any of the artists who might have influenced its generations.

OpenAI, however, is leaving that topic for another day: neither the Point-E paper nor its GitHub page mentions copyright.

To their credit, the researchers acknowledge that they expect Point-E to suffer from other problems, such as biases inherited from the training data and a lack of safeguards against misuse of the models. That may be why they are careful to describe Point-E as a “beginning point,” one they hope will motivate “additional study” in the field of text-to-3D synthesis.

Follow us on Instagram: @niftyzone